Covering Climate Change: A Sentiment Analysis of Major Newspaper Articles from 2010 - 2020


2021, Vol. 13 No. 09


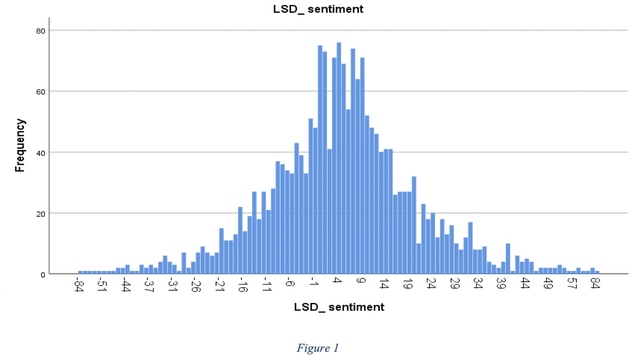

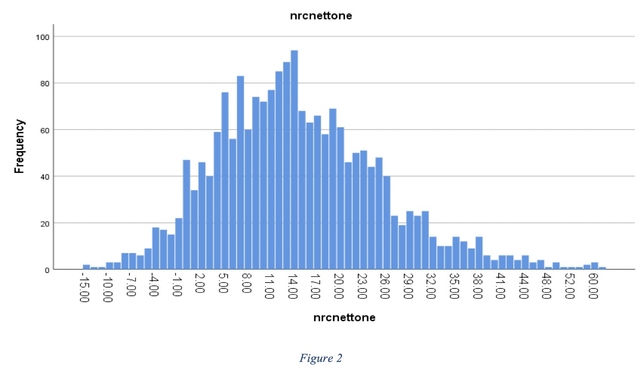

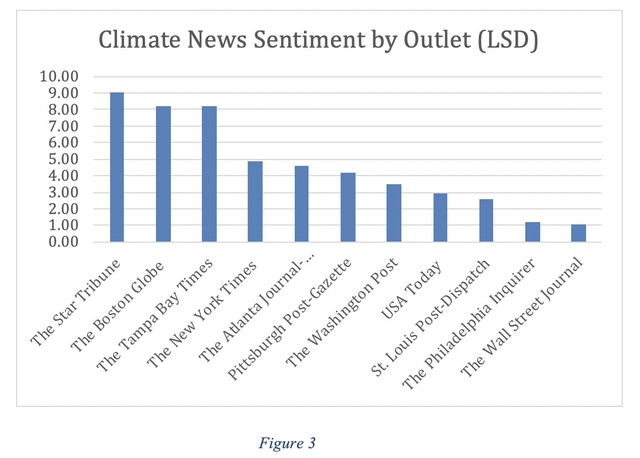

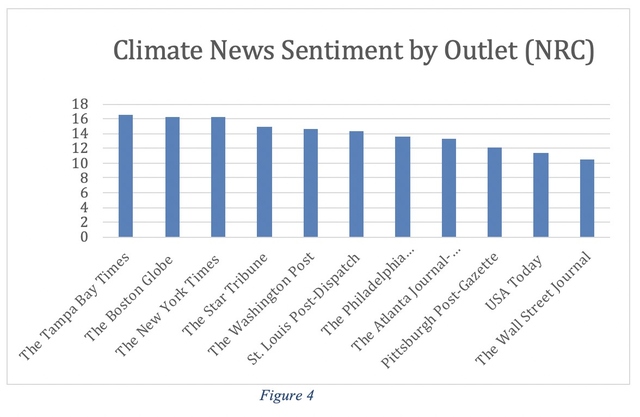

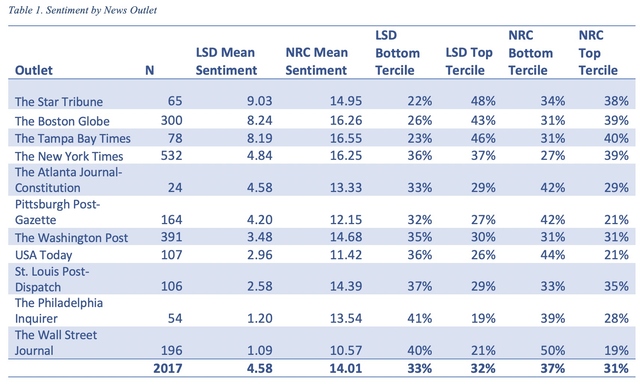

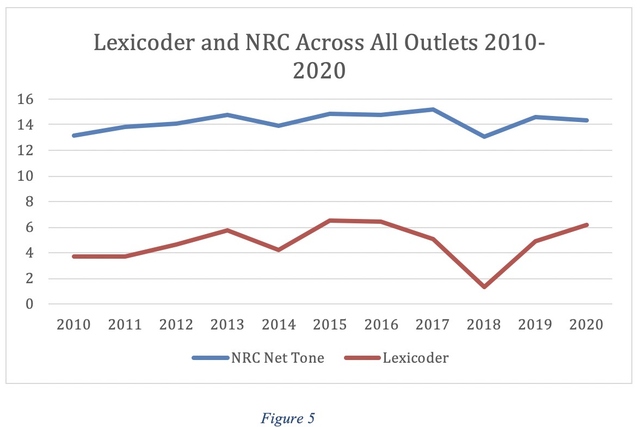

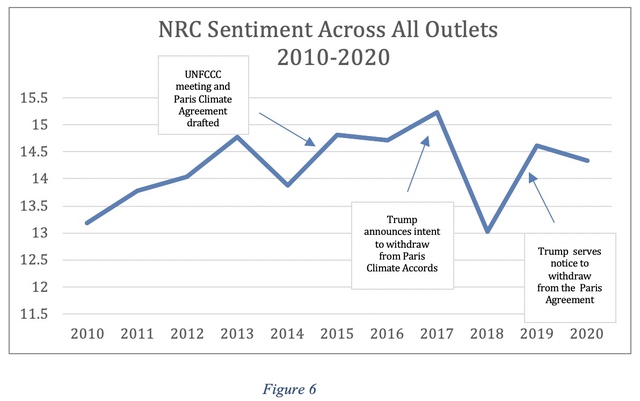

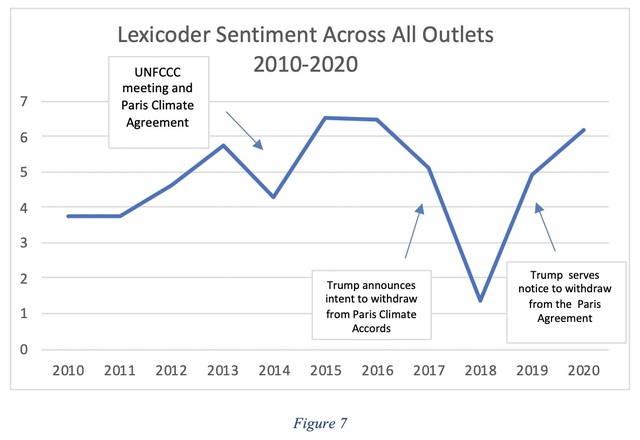

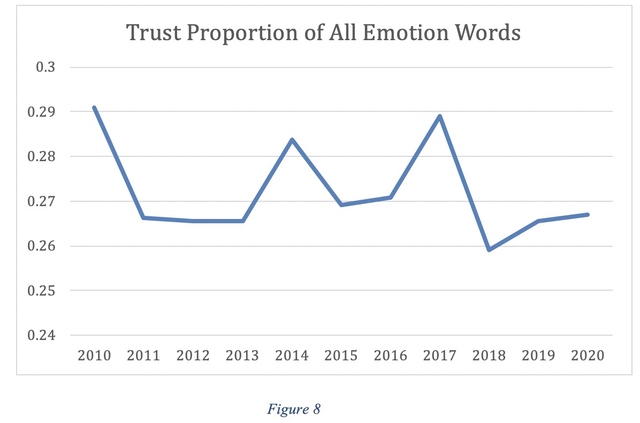

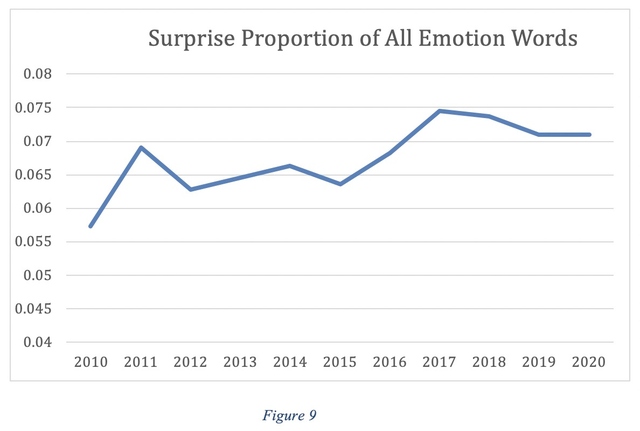

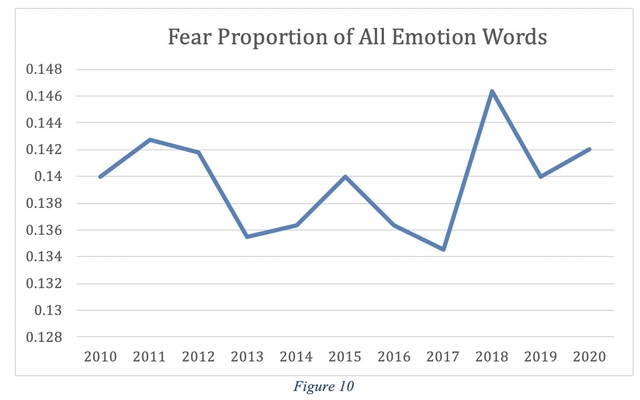

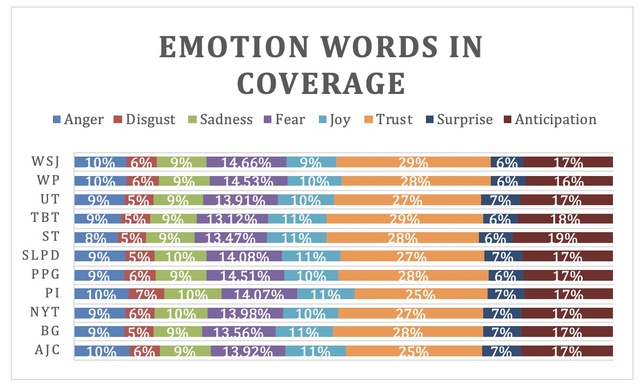

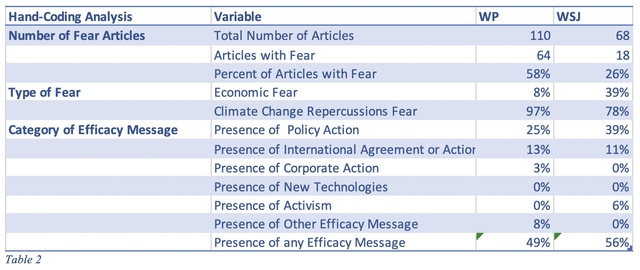

Abstract

This research lies at the nexus of two fields: political communication theory on emotional affect and political information processing, and the burgeoning field of sentiment analysis. News coverage can affect opinion both through the information it provides and through the emotional reactions it generates. To better understand the affective content of news coverage of climate change, we used two dictionary tools to perform an unsupervised sentiment analysis. The corpus consists of 2,017 articles about climate change published in 11 major U.S. newspapers between 2010 and 2020. Using these articles, this study assesses both the valence and the eight basic emotions found in the coverage. Building on political communication literature that treats fear as a demobilizing force in climate change communication, this research also evaluates coverage that features fear as a discrete emotion, to understand whether other features of such articles might offset any demobilizing tendency of fear. We find variation in the sentiment and emotion of news coverage across outlets and over time, potentially in response to events and changes in understanding of the issue. We also find that roughly half of the articles that include fear surrounding climate change also include efficacy-promoting messages. These findings have implications both for the use of dictionary tools in sentiment analysis and for our understanding of the nature of climate change coverage.

Introduction

Climate change is an existential threat, endangering the habitability of the Earth for future generations. Despite the near-universal consensus (93 percent)1 among scientists that climate change has anthropogenic roots, a 2016 Pew poll found that fewer than half of all Americans believe climate change is due to human activity. More concerning, 20 percent of Americans believe there is no evidence of climate change.2 Public support for making climate change mitigation a priority has increased significantly over the past decade. In 2010, just under 30 percent of U.S. adults said dealing with global climate change should be a priority for the president and Congress, compared with 52 percent in 2020.3 However, this is still barely more than half the population. This divergence between scientists and the American public leads us to ask what messages about climate change we are consuming and how those messages may shape opinion formation. The lack of comprehensive climate change mitigation policy at the national level raises the same question. Additionally, political communication literature has demonstrated a dynamic relationship between the emotional tagging of political and policy issues and the processing and formation of beliefs. News coverage is a major source of the information we use to create these emotional tags. News media are tasked with reporting on the world and reducing it to a more tangible scale. In doing so, they often rely on a few words to “…stand for a whole succession of acts, thoughts, feelings and consequences.” Moreover, “the words we read to evoke form the biggest part of the original data of our opinions.”4 Word choice, framing, and language are consequential in how we process political information and form the pictures in our heads.5 Furthermore, these conceptualizations are tagged with emotions, which political communication theory6 tells us we effectively link to the idea or issue. When prompted, we respond habitually, drawing on these prior emotional tags. Therefore, this research investigates the types of emotion and sentiment we are consuming in climate change news coverage, because these influence information processing and the formation of opinions.

Additionally, we do not assume that positive sentiment surrounding climate change translates into support for climate change mitigation policy. The sentiment of coverage reflects events, policy directions, public opinion, and changes in understanding of the issue. In turn, it also informs public opinion, policy, and understanding of the issue.
We can view sentiment as an amalgamation of these factors. Beyond sentiment, it is worthwhile to examine the specific emotions invoked in coverage, such as anger, fear, disgust, sadness, trust, surprise, anticipation, and joy. Furthermore, climate change communication scholarship has demonstrated that discrete emotions (such as fear and hope) play an important role in determining support for climate change mitigation policy. To assess the sentiment and emotion in the language mainstream U.S. news outlets use to cover climate change, this research applies two dictionary tools in a large-scale sentiment analysis. The field of sentiment analysis (SA), also referred to as opinion mining (OM) or emotional polarity computation, assesses whether the language surrounding an issue or topic is positive, negative, or neutral (see the Methods section for a discussion of the specific dictionary tools). These tools belong to the larger, burgeoning field of text mining, which transforms unstructured textual information into data that can be analyzed. They have been applied for marketing purposes (opinion mining) and, often in academia, to assess the sentiment of individual social media activity. This research instead uses dictionary tools to examine the emotion and sentiment conveyed in news, which, scholarship tells us, influence readers’ information processing and opinion formation. This work does not presume that a newspaper’s phrasing and language are consciously chosen with the intent to produce a certain political outcome or to support a partisan view of an issue (opinion pieces aside). However, words carry emotional attachments, and decisions about what to include (and exclude) show that the reporting process is intrinsically human. Reporting therefore transmits emotion and affect while providing information and covering issues.
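To make the dictionary approach concrete, the following sketch counts matches against a positive word list and a negative word list, then labels a text positive, negative, or neutral by comparing the two counts. It is a minimal illustration under stated assumptions, not the procedure of any published dictionary. The word lists `POSITIVE` and `NEGATIVE` and the functions `tokenize` and `polarity` are hypothetical. The rule that equal counts mean "neutral" is also an assumption.

```python
import re

# Hypothetical toy word lists for illustration only; published sentiment
# dictionaries contain thousands of entries and are not reproduced here.
POSITIVE = {"progress", "agreement", "hope", "solution", "benefit"}
NEGATIVE = {"threat", "disaster", "crisis", "flood", "damage"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def polarity(text):
    """Label text positive, negative, or neutral by counting lexicon matches.

    Returns (label, positive_count, negative_count). Equal counts,
    including zero matches, are treated as neutral (an assumption).
    """
    tokens = tokenize(text)
    pos = sum(token in POSITIVE for token in tokens)
    neg = sum(token in NEGATIVE for token in tokens)
    if pos > neg:
        label = "positive"
    elif neg > pos:
        label = "negative"
    else:
        label = "neutral"
    return label, pos, neg


print(polarity("The agreement is real progress against the climate crisis."))
# prints ('positive', 2, 1)
```

Each word is scored in isolation, so a phrase such as "no progress" would still count as positive. This is the kind of context loss that critics of bag-of-words sentiment analysis point to.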
The selection of words, especially words with high emotional affect, can influence a reader’s political and emotional processing of a subject. This research proposes that the general sentiment and the discrete emotions conveyed by newspapers’ climate change coverage will differ slightly for each paper, depending on the ideological makeup of its audience. We also expect them to evolve over time in response to world climate events and public perception.

Literature Review

Three hallmark theories in political communication attempt to explain how we process political information. The first is that social concepts evaluated in the past are affect-laden, carrying a positive or negative charge.7 Referred to as the hot cognition hypothesis,8 this postulate places emotions at the forefront of how humans process information and form opinions about political leaders, groups, and issues.9 Second, the affect individuals assign to an idea is not static. Drawing on social psychology research,10 scholars have developed the theory of on-line processing. This is the idea that the evaluative affect attached to concepts in memory is updated upon exposure to new information about the memory object.11 Third, psychology literature12/13 has established that feelings are the first factor to inform political decisions, preceding conscious cognitive evaluation; this is known as the primacy of affect.14 Taken together, these ideas inform motivated reasoning theory, which holds that individuals are more likely to accept information consistent with their predispositions, that is, consistent with their current affective tag about an individual or issue. In other words, reinforcing information will be accepted without scrutiny, whereas individuals will engage in motivated reasoning when they encounter information counter to their predispositions.
Working from the theory of motivated reasoning, Redlawsk finds that citizens often double down and increase support for their prior belief. This runs counter to the expectation that affective biases should lead them to spend more time processing information that does not align with their prior affect. This bolstering effect of affect-driven processing may lead to lower-quality decision making.15 Building on research into the implications of motivated political processing, Taber and Lodge conducted two experiments in 2006. They found evidence of both a confirmation bias and a disconfirmation bias in information processing. Participants counter-argued contrary arguments and uncritically accepted supporting arguments. When given the chance to select sources, they even sought out confirming evidence. Both biases lead to attitude polarization.16 This has implications for the United States, where two insular, asymmetric, ideological media systems have formed, creating separate spheres that inform citizens.17 This scholarship at the intersection of political communication and social psychology illustrates the important role emotions play in the processing of political information. Demonstrating this, Taber and Lodge argue: “Feelings toward the candidates on election eve may be the most powerful and useful information available to citizens, making truly dispassionate deliberation neither possible nor desirable.” Additionally, the Affective Intelligence Theory model suggests that emotion has a real influence on how we process information and develop our views. The model posits that individuals have two separate systems for decision making. The first is the disposition system, which guides behaviors without people consciously considering them (as with reliance on political party heuristics). The second is the surveillance system, which is invoked when people experience unfamiliarity, fear, or anxiety. It opens individuals up to actively searching for new information.18 Given the levels of fear we detect in our research, climate change coverage may activate the surveillance system.

Climate Change Coverage and Policy Implications

As we grapple with climate change, it is important to evaluate news messages, since “[e]lite news media critically contribute to public discourse and policy priorities through their ‘mediating’ and interpretative influences.”19 In other words, news is critical to creating the emotional tag we place on political and policy issues. News coverage is likely to shape public understanding of climate change. It is also likely to affect whether climate change is seen as an important problem and how much support various mitigation efforts receive. Additionally, “[m]ass-media coverage of climate change is not simply a random amalgam of newspaper articles and television segments; rather, it is a social relationship between scientists, policy actors and the public that is mediated by such news packages.”20 To understand the dynamic relationship between mass media and climate change policy in the U.S., we must first understand how journalistic norms have influenced the coverage of climate change and, by extension, public opinion. Most notably, ‘false balance’ has led to biased coverage of the anthropogenic causes of climate change and of policy responses.21 Moreover, false balance has contributed to a “significant divergence of popular discourse from scientific discourse” in the public’s understanding of climate change.22 Additionally, early research has shown climate change coverage to be cyclical by nature.
Specifically, the timing and frequency of climate change coverage depend heavily on weather events: “Thus, public attentiveness to environmental issues increases when those issues piggyback on actual events that force this issue into public consciousness.” This may partly explain the lack of support for climate change mitigation policy, since holding the public’s interest in the issue is important to finding and implementing solutions.23 Given partisan differences in views on climate change,24 one might expect coverage of the issue to change with partisan shifts in power. However, in a 2009 conference paper, Soroka, Farnsworth, Young, and Lawlor found that coverage did not change significantly following such shifts.25 This finding may no longer hold. Their study covered 1999 to 2009, yet polarization began to increase rapidly in 2007,26 leading to the development of two isolated media systems.27 Hart, Nisbet, and Myers also examined the role of political ideology. They found that while political orientation does affect the reception of climate change messages, “increasing risk perceptions may dampen political polarization and lead to greater policy support across the political spectrum.”28 However, Chinn, Hart, and Soroka conducted a more recent automated content analysis of all climate change articles from major U.S. newspapers from 1985 to 2017. They found that media representations of climate change have become increasingly polarized and politicized.29

Much of the scholarship on climate change and emotion is geared toward messaging strategy and toward identifying best practices for generating support for climate change mitigation policies.
For example, in their 2011 study, Hart and Nisbet examined how altering perceptions of who is affected by climate change interacts with party affiliation. They found that this interaction can heighten political polarization in response to potential climate mitigation policies. They also found that “neither factual knowledge about climate change nor general scientific knowledge was associated with support for climate change policy.” This emphasizes the function of motivated reasoning and embedded social identity cues in the processing of scientific messages.30 Smith and Leiserowitz’s 2013 experiment also points to the outsized role of emotion in processing climate change messages. They asked 1,000 individuals to rate the intensity of the emotions they experienced when prompted to think about climate change. Participants also rated their support for a range of climate mitigation policies, from cap and trade to increasing the cost of gasoline. Smith and Leiserowitz found that emotions accounted for 50 percent of the variance in support for climate policies. Moreover, emotions were the strongest predictors, surpassing political party or ideology, cultural worldviews, image associations, and sociodemographic variables.31 However, in a strategic assessment of climate change communication, Chapman, Lickel, and Markowitz push back on Smith and Leiserowitz, saying the finding “rests on correlational evidence.” They caution against focusing on affect because “short-term affective impacts of particular messages are not indicative of meaningful behavioral responses, nor can they assume that an immediate emotional response will persist or have consistent effects over time.”32 Despite these concerns, many scholars find evidence that emotion plays a significant role in how individuals perceive climate change.
For example, a 2009 study found that negative, sensationalistic emotions capture an individual’s attention but do not necessarily lead to action, and can instead make people feel overwhelmed.33 A 2011 study assessed the coverage of three major cable news channels (Fox News, CNN, and MSNBC) and the relationship between viewership and beliefs about climate change. Researchers found that Fox takes a more dismissive tone toward climate change than the other two networks. They also found a “negative association between Fox News viewership and acceptance of global warming, even after controlling for numerous potential confounding factors.” On the other hand, “viewing CNN and MSNBC is associated with greater acceptance of global warming.”34 Another 2011 study compared the effects of science and environment news with those of political news. It examined public risk perceptions of climate change and policy support for mitigation efforts. Unsurprisingly, researchers found that science-based news leads to higher risk perceptions and more accurate beliefs than political news does.
The Role of Discrete Emotions in Climate Change Information Processing

Discrete emotions have been shown to guide the processing of information and even to predict behavior: “According to cognitive appraisal theory and functional approaches to emotion, specific emotions guide behavior based on the relationship they signal between individuals and their environment, which triggers a particular action tendency in order to cope with the emotion.”35 For climate change information specifically, Smith and Leiserowitz have demonstrated that worry and hope are key indicators of whether individuals will support policy action to mitigate climate change.36 A 2018 study by Nabi, Gustafson, and Jensen echoes this idea. It demonstrates the importance of emotion, specifically hope, “as a key mediator between gain-framed messages and desired climate change policy attitudes and advocacy.”37 On the other hand, a 2016 experiment by Hornsey and Fielding found that “emotional distress is strongly correlated with mitigation motivation; hope is not.” They also note that optimistic messages lead to lower climate risk perceptions, which in turn lower mitigation motivation. From a strategic standpoint, Hornsey and Fielding find pessimistic climate change messaging more effective than hope at promoting efficacy while avoiding complacency.38 Across the literature, fear and hope are consistently key emotions in climate change news processing. Which of the two better promotes support for climate change mitigation may depend on the level or balance of the two emotions in news content. For example, if hope levels are too high, individuals may feel they do not need to take action. If fear messages are too strong, individuals may feel demobilized and be less receptive to mitigation strategies.
Furthermore, the emotional balance that promotes support for climate change mitigation policies likely differs across individuals. In my preliminary research, fear was consistently the most frequently detected negative emotion. Overall, it was the second most frequently detected emotion, after trust. Fear-based messages are unavoidable in news coverage of climate change. Research on fear in climate change communication reports varying responses among participants. In some cases individuals demonstrate a preference for fear-based messages, while other studies have shown fear to distance the public from the threat.39 Smith and Leiserowitz suggest that “the limited success of global warming fear appeals may also be attributable to a feeling of personal invulnerability combined with the belief that individual or collective action either is too difficult or would not make a difference.”40 Limited research has found fear-based messages to be preferred by individuals,41 while other studies have shown fear messages to be demobilizing, distancing individuals from the issue.42 One way to mitigate the potentially demobilizing response to high levels of fear-based messaging is to also include messages that increase one’s self-efficacy. An example would be something that is being done to address climate change. Across studies, messages that evoke individual efficacy have been shown to be integral to increasing climate activism.43 “[E]fficacy messages – by portraying climate change as an addressable threat – may diminish fear… [I]n a fear-based message appeal, the inclusion of efficacy information encourages people to cognitively confront the perceived danger, thereby alleviating fear, and in turn, motivates them to take action to alleviate the danger.”44 However, efficacy messages may not be common in climate change coverage.
Boykoff and Boykoff argue that first- and second-order journalistic norms (personalization, dramatization, novelty, authority, order, and balance) have led to an “‘episodic framing’ of [climate] news – rather than ‘thematic framing’ whereby stories are situated in a larger, thematic context – and this has been shown to lead to shallower understandings of political and social issues.” Episodic coverage tends to focus on the dramatic details of a single event rather than on the larger background, possibly leading to high levels of fear. This may explain why news does not carry more messages that would promote an individual’s self-efficacy. Fear is likely to be present in news stories about climate change. This research therefore also assesses when fear is evoked and whether messages that promote self-efficacy appear alongside it.

Developing Tools for Sentiment Analysis

Emotion is central to processing climate change messages, and public opinion and news media influence each other. It is therefore relevant to explore the types of emotionally charged language the public consumes about climate change. Analyzing thousands of texts by hand for tone, language, or policy frames is typically not feasible, so researchers have developed a number of methods for automated content analysis. The two main methods are supervised machine learning and unsupervised machine learning, the latter of which includes dictionary-based approaches.45 In one of the first dictionary-based computerized text analyses, Tausczik and Pennebaker developed the Linguistic Inquiry and Word Count (LIWC) program. It calculates the percentage of words that fall into psychologically meaningful categories.46 Similarly, in 2010, a group of researchers developed their own algorithm, SentiStrength, to extract sentiment from online English texts.
Applied to Myspace comments, SentiStrength predicted positive and negative emotion with more than 60 percent accuracy.47 Building on the work of these scholars, Young and Soroka in 2012 made the first attempt to build a dictionary specifically for the study of political communication. They combined three existing lexical resources into a valence dictionary and used it to evaluate New York Times coverage of four major topics.48 Further exploring other scholars’ work on the prevalence of negativity in news,49/50 Young and Soroka, along with Balmas, performed a sentiment analysis of negativity, fear, and anger in news content. Using fifty-five thousand front-page news stories, the researchers demonstrated that fear and anger are distinct sentiments and can be measured as such. Prior research showed that fear and anger produce different political attitudes and behaviors, which makes this finding significant.51 In 2016, Haselmayer and Jenny recognized that most automated sentiment analysis tools were limited to English texts. They created a crowd-coding procedure to extend negative sentiment dictionaries to other languages.52 Following their work, others developed a German sentiment dictionary53 as well as an automated translatable sentiment dictionary.54

Content and sentiment analyses that capture the tone of news coverage have also been used to track policy issues and frames in media. Accurate information is important for promoting public responsiveness.55 Moreover, “[t]he average citizen” learns about policy from the mass media and does not experience government policy directly. Both points create a need to study media coverage of public policy. Soroka and Wlezien propose a method for tracking mass media coverage of policy changes, paying specific attention to whether media content reflects policy directions.
Through a dictionary-based content analysis of defense spending coverage, they find strong cues about policy changes in the media. They also note that the opinion-policy link depends not only on the volume of coverage but also on its accuracy.56 Soroka and Wlezien’s work informs our evaluation of how climate change coverage fluctuates over time in response to policy directions. However, we do not attempt to draw connections to the volume or accuracy of coverage. Building on the defense spending analysis, Soroka and Wlezien, along with Neuner, investigate not just the presence of policy cues in news coverage but also how those cues correlate with public opinion survey data. Their research demonstrates that citizens extract cues from news content, which inform their perceptions of and preferences for policy changes.57 Another group of researchers used Young and Soroka’s 2012 Lexicoder Sentiment Dictionary in an automated dictionary-based content analysis. Their goal was to code policy topics in both English and Dutch political texts. To validate their English dictionary, they combined the Prime Minister’s Question Time dataset (United Kingdom) with State of the Union speeches (United States), and used human coders to apply the codebook. They argue in favor of a dictionary-based approach because policy topics are relatively easy to identify with a finite set of words. Their automated dictionaries produced valid, reliable, and comparable measures of policy agendas that correspond well with human coding. Burscher, Vliegenthart, and de Vreese also performed an automated sentiment analysis to test the validity of news frames about nuclear power. In their 2015 study, they combined sentiment analysis with a cluster analysis that grouped articles according to their word use.
They applied natural language processing to select the parts of an article that capture frames, then clustered articles based on their framing. Compared with manual coding, their combination of cluster and sentiment analysis reliably identified and coded emphasis frames.58

Some researchers favor supervised machine learning over dictionaries for coding policy issues. Burscher, Vliegenthart, and de Vreese warn against dictionaries because they rely on subjective human coders to classify words, which can lead to limited and biased outcomes.59 On the other hand, “computer-assisted methods premise that a word and a phrase always have exactly one meaning in every context,” and human coders are assumed to be better at detecting multiple meanings.60 Combining both approaches can therefore limit the drawbacks of each. Other scholars point out further shortcomings of dictionaries in the automated content analysis of political texts. For example, Grimmer and Stewart note that applying a dictionary outside the domain for which it was developed can yield inaccurate results. Explicitly validating dictionaries is also difficult, because human coders struggle to replicate the granular tone a dictionary can capture. For this reason, Grimmer and Stewart suggest either measuring only positive and negative affect or using validation methods similar to those used for unsupervised machine learning.61 Further exposing the weaknesses of dictionary-based sentiment analysis, one study applied five off-the-shelf sentiment tools to Dutch economic news. It found wide divergence in tone measurement. It also found that a small tailor-made lexicon was not inferior to larger established dictionaries. The researchers conclude that combining individual dictionaries achieves stronger results.62 This study heeds that advice and combines two dictionaries for more accurate results.

Perhaps the greatest limitation of sentiment analysis is its “bag-of-words” approach, in which words are evaluated independently, stripped of their grammatical structure and context. To mitigate the drawbacks of dictionary-based sentiment analysis, researchers in 2018 used distributed word embeddings validated with crowd-coded training sentences. Word embeddings represent each word as a vector that marks its position in a space, so that words with similar meanings are mapped close to each other. An embedding approach can also classify words that did not appear in the training text by assessing their relationships to similar words. The researchers found that the embedding approach was more accurate than the bag-of-words approach. They identified its ability to classify words absent from the training data as a major advantage.63 This research uses the bag-of-words approach, but we attempt to mitigate its limitations by combining automated analysis with hand-coded analysis.

Applying Dictionaries for Climate Change Sentiment Analysis

One of the most popular uses of automated sentiment analysis is opinion mining of social media posts. A number of researchers have used it to assess opinions of climate change on Twitter. In 2019, Dahal, Kumar, and Li investigated the nature of climate change discussion on Twitter across different countries and over time. They also analyzed which topics were most prevalent.
Using two dictionaries, they found that the overall discussion was negative toward the issue of climate change, specifically “when users are reacting to political or extreme weather events.”64 Another study examined climate change discussion on Twitter surrounding the 2015 UN Climate Change Conference. It used automated sentiment analysis to determine whether an account was personal or non-personal, and to “track the influence of user demographics on opinion and emotion expression towards climate topics, and the difference in emotion intensity shown by each account type.”65 A 2014 conference paper also used opinion mining on Twitter data to analyze perceptions of climate change. Its authors used subjective-versus-objective and positive-versus-negative classifiers. They reported significant variability in sentiment polarity over time, but observed a “connection between short-term fluctuations in negative sentiments and major climate events…[and] found that major climate events can have a result in sudden change in sentiment polarity.”66 Lastly, a 2015 research article analyzed all tweets containing the word “climate” posted from September 2008 to July 2014. The researchers used the Hedonometer, a sentiment analysis tool that takes a bag-of-words approach to calculate a happiness score for a large collection of text. Analyzing tweets by date, they found that “natural disasters, climate bills, and oil-drilling can contribute to a decrease in happiness while climate rallies, a book release, and a green ideas contest can contribute to an increase in happiness.” Moreover, they found that more of the tweets responding to climate change news came from activists than from climate deniers.67 These studies use opinion mining to gauge public emotion and support for climate action. This research, by contrast, is concerned with the effect of news messages, which are integral to the formation of political beliefs.
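A happiness score of the kind described above can be read as a frequency-weighted average of per-word happiness ratings. The following sketch computes such an average over a list of tokens. The ratings in `HAPPINESS` are invented for illustration and are not the crowd-rated word list the Hedonometer actually uses. The function name `happiness_score` is hypothetical. The default `lens`, which ignores near-neutral words scoring strictly between 4 and 6, is an assumption modeled on common descriptions of the tool, not a reproduction of its exact settings.

```python
from collections import Counter

# Invented happiness ratings on a 1-9 scale, for illustration only.
HAPPINESS = {
    "rally": 7.0, "hope": 7.5, "green": 6.5,
    "disaster": 1.5, "drilling": 3.5, "flood": 2.5,
    "climate": 5.0,
}


def happiness_score(tokens, scores=HAPPINESS, lens=(4.0, 6.0)):
    """Frequency-weighted average happiness of the rated words in `tokens`.

    Words missing from `scores`, and words whose rating falls strictly
    inside `lens` (treated as neutral), are ignored. Returns None when
    no word is rated.
    """
    counts = Counter(tokens)
    total, weight = 0.0, 0
    for word, freq in counts.items():
        h = scores.get(word)
        if h is None or lens[0] < h < lens[1]:
            continue
        total += h * freq
        weight += freq
    return total / weight if weight else None


before = "climate rally hope green rally".split()
after = "climate flood disaster drilling flood".split()
print(happiness_score(before), happiness_score(after))
# prints 7.0 2.5
```

The neutral word "climate" is filtered out by the lens. The scores therefore reflect only the emotionally loaded vocabulary surrounding it, which is why events such as rallies or disasters can shift the average.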
To the best of my knowledge, only one academic study has attempted to evaluate the affect of climate change media coverage using a dictionary-based approach. A 2017 conference paper performed a fully unsupervised analysis of four UK newspapers’ coverage of climate change. It combined a topic model, Latent Dirichlet Allocation (LDA), with the SentiWordNet sentiment lexicon. The authors found differences in main topics and in negativity versus positivity across the four papers within a single year. They caution, however, that their results rest on existing dictionary tools that ignore context and omit some terminology specific to climate change. They suggest that future work could incorporate co-training to improve the validity of the analysis. They also suggest that future work could evaluate the emotions expressed rather than only positive and negative attitudes.68 Their research focused on UK newspapers, whereas this research focuses on U.S. news. Additionally, this study does not attempt co-training or a semi-supervised approach, but it does broaden the analysis from polarity to the specific emotions expressed. This research aims to build a clearer picture of the affect present in newspaper coverage, since that affect influences reader emotion and information processing. This picture allows us to posit potential influences on, and changes in, public support for climate change mitigation policy.

Outlet Selection and Audience

To understand the affect present in news coverage, I analyze climate articles from 11 daily newspapers. The 11 outlets in our corpus were selected because Factiva offers a wide time range of their articles, and because they provide regional and audience diversity. Data on the ideological makeup of audiences are not available for most of the regional newspapers in our corpus. However, the audiences of USA TODAY, The Wall Street Journal, The New York Times, and The Washington Post have been analyzed.
According to a 2014 analysis, 61 percent of The Washington Post’s audience and 65 percent of The New York Times’ audience have political values that are left of center, whereas 41 percent of The Wall Street Journal’s audience is left of center. On the other hand, just 19 percent of The Washington Post’s consumers and 12 percent of The New York Times’ consumers are mostly or consistently conservative, compared to 35 percent of The Wall Street Journal’s audience. Additionally, the Journal has the highest level of trust of any newspaper among mostly and consistently conservative respondents, while The New York Times and The Washington Post are the most trusted print sources among consistent liberals. USA TODAY’s audience is roughly evenly distributed across the ideological spectrum, and the paper had the highest level of trust of any newspaper among respondents with mixed ideological views (38 percent).69

Research Questions

RQ 1: What is the sentiment of climate change coverage? Does this vary by news outlet? Given the lack of research on this topic, RQ 1 seeks to gain an understanding of the overall sentiment in each newspaper. While we do not have a clear sense of how sentiment will vary, I expect that the sentiment conveyed in climate articles will be more negative in outlets with more conservative audiences, given public opinion data showing that conservatives are much less likely to believe human activity contributes a great deal to climate change70 and to support climate change mitigation policy.71

RQ 2: Does the sentiment of climate change coverage vary over time? Does any variation correspond to major climate change events? To assess these questions, we will look at sentiment over time, compare the sentiment in coverage before and after key decisions related to the Paris Accords, and evaluate the presence of discrete emotions over time.
To understand whether coverage differs significantly around these events, we will analyze our results across four time periods to assess differences in emotions and sentiment:

1: Before the Paris Climate Accords (before 12/2015)
2: After the Paris Climate Accords, up to Trump’s announcement of his intention to withdraw (12/2015 - 05/2017)
3: From the announced intent to withdraw to the formal notice of withdrawal (06/2017 - 10/2019)
4: From the formal notice of withdrawal to the end of the time range (11/2019 - 10/2020)

RQ 3: What are the top emotions associated with each newspaper’s coverage of climate change? Similar to RQ 1, RQ 3 seeks to evaluate the discrete emotions present in each newspaper’s coverage. Previous studies on emotion in political processing have shown that discrete emotions generate different effects. The NRC dictionary will allow us to see the mix of anger, fear, anticipation, trust, surprise, sadness, joy, and disgust evoked in coverage across papers.

RQ 4: Where fear is detected at high levels in coverage, is there also information that would promote one’s self-efficacy? Drawing on prior work suggesting that the presence of efficacy information may be important in offsetting the potential demobilizing effects of fear messaging, I analyzed the articles from The Wall Street Journal and The Washington Post with the highest levels of fear, as identified by the automated coding with the NRC dictionary.

Methodology

Overview

This paper combines automated sentiment and emotion analysis with a content analysis using human coders to more closely examine articles that the automated analysis identified as containing high levels of words invoking fear. It is first essential to understand how this study defines key terms. The broadest category this research falls under is text mining, which refers to the conversion of text data into high-quality information. One way to accomplish this is through Natural Language Processing (NLP).
NLP is a field of artificial intelligence concerned with programming computers to analyze and manipulate natural language text. Sentiment analysis is a type of natural language processing aimed at evaluating a piece of text and categorizing subjective information, such as emotion and sentiment. Sentiment analysis often focuses on determining the polarity (also referred to as valence) of a text, that is, whether it is negative or positive. While this research measures the occurrence of positively and negatively charged words (which provides the sentiment score), it is also concerned with emotions such as anger, fear, and surprise, which some automated dictionaries incorporate into their coding mechanisms.

Coding and Dictionaries

This study performs a dictionary-based sentiment analysis, treating the text “as a combination of its individual words and the sentiment content of the whole text as the sum of the sentiment content of the individual words.”72 The dictionaries, or lexicons (for the purposes of this research, these terms are used interchangeably), that this study uses are the Lexicoder Sentiment Dictionary and Tidy Text’s NRC lexicon. The NRC Word-Emotion Association Lexicon (EmoLex), developed by Saif Mohammad and Peter Turney and available through Tidy Text, measures both polarity (negative and positive words) and the presence of eight basic emotions: anger, fear, anticipation, trust, surprise, sadness, joy, and disgust. The dictionary was constructed through manual crowdsourced annotations on Mechanical Turk and was validated “using some combination of crowdsourcing again, restaurant or movie reviews, or Twitter data.”73 The NRC dictionary contains a total of 14,182 unigrams (words).74 Since EmoLex was created for use in a general domain, and dictionaries have been shown to vary,75 it is important that our results are validated against another dictionary.76 The second dictionary used is the Lexicoder Sentiment Dictionary (LSD).
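As a minimal sketch of the dictionary-based logic quoted above, the Python snippet below scores a sentence by looking up each word and summing the categories that word is associated with. The four-word lexicon is hypothetical, not an excerpt of EmoLex, and the study itself ran its analysis in R with Tidy Text; the snippet only illustrates how per-word associations add up to per-text emotion counts.

```python
import re
from collections import Counter

# Toy stand-in for a word-emotion lexicon such as NRC EmoLex. These entries are
# invented for illustration and are NOT copied from the real lexicon.
TOY_LEXICON = {
    "threat": {"negative", "fear"},
    "disaster": {"negative", "fear", "sadness"},
    "hope": {"positive", "anticipation", "joy", "trust"},
    "agreement": {"positive", "trust"},
}

def score_text(text: str, lexicon: dict) -> Counter:
    """Bag-of-words scoring: the text's counts are the sum of its words' categories."""
    counts = Counter()
    for token in re.findall(r"[a-z']+", text.lower()):
        counts.update(lexicon.get(token, ()))  # words not in the lexicon add nothing
    return counts

counts = score_text("A threat of disaster, but the agreement offers hope.", TOY_LEXICON)
print(counts["fear"], counts["trust"], counts["sadness"])  # 2 2 1
```

Because each word contributes independently, word order and context are ignored, which is the limitation of general-domain dictionaries noted above.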
Developed specifically for use in political communication, the Lexicoder Sentiment Dictionary is a valence dictionary available in the quanteda package for the statistical software R. The LSD is a smaller dictionary, with 9,148 words. Unlike the NRC dictionary, the LSD accounts for negation. For example, positive words preceded by a negating word are counted separately77 and can then be combined with the total negative count, as was done in this study. Similarly, negative words preceded by a negating word, forming a double negative, were added to the positive sentiment category. We expected the LSD to provide higher accuracy given the domain-specific material it was developed with; however, because it lacks a more granular analysis of discrete emotions, we also incorporated the NRC dictionary into the research. To more closely examine coverage that invokes the key emotion of fear, we hand coded whether fear was invoked with respect to climate change, whether that fear concerned the repercussions of climate change or the economic costs of mitigation, and whether the article contained information that would promote one’s feeling of efficacy or hope that something is being done to address climate change. For the latter, the categories include policy action, international agreement or action, corporate action, new technologies, activism, or other. Two hand-coders performed this coding, with an average agreement of 82 percent across the nine variables we assessed (see Appendix for codebook). Finally, in addition to allowing us to consider whether fear coverage may contain efficacy messages, which the literature suggests may be important when fear messaging is present, the hand-coded analysis also serves as another form of validation of dictionary performance.
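The negation rule described above for the LSD (negated positives counted as negative, double negatives counted as positive) can be illustrated with a simplified Python sketch. It assumes hypothetical word lists and a hypothetical set of negating words, and it looks back only one word; the LSD itself handles negation through its own dictionary entries, so this approximates the counting rule rather than the dictionary.

```python
import re

# Invented toy word lists for illustration; the real LSD is far larger.
POSITIVE = {"effective", "good", "success"}
NEGATIVE = {"failure", "bad", "crisis"}
NEGATORS = {"not", "no", "never"}  # hypothetical negator list

def net_sentiment_with_negation(text: str) -> int:
    """Net = (positive + negated negatives) - (negative + negated positives)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    pos = neg = 0
    for i, tok in enumerate(tokens):
        negated = i > 0 and tokens[i - 1] in NEGATORS  # one-word look-back window
        if tok in POSITIVE:
            if negated:
                neg += 1  # e.g. "not effective" is added to the negative count
            else:
                pos += 1
        elif tok in NEGATIVE:
            if negated:
                pos += 1  # double negative, e.g. "not bad", is added to the positive count
            else:
                neg += 1
    return pos - neg

print(net_sentiment_with_negation("The policy was not effective."))        # -1
print(net_sentiment_with_negation("The outcome was not bad at all."))      # 1
print(net_sentiment_with_negation("A crisis, but the plan is a success."))  # 0
```

Without the negation check, "not effective" would wrongly raise the positive count, which is why a dictionary that ignores negation, such as NRC, can overstate positivity in some passages.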
Forming the Corpus

To contribute to the newly developing field of news coverage and sentiment analysis, this research uses sentiment analysis to evaluate the sentiment and emotions of 11 major U.S. newspapers:
1) The Washington Post
2) The New York Times
3) The Wall Street Journal
4) Boston Globe
5) Tampa Bay Times
6) St. Louis Post-Dispatch
7) Pittsburgh Post-Gazette
8) USA Today
9) Star Tribune
10) Philadelphia Inquirer
11) Atlanta Journal-Constitution

The corpus was built in Factiva by searching for “Climate Change,” “Climate Crisis,” or “Global Warming” in the headline or lede of articles published from January 1, 2010 to October 1, 2020. Because this search returned more than 13,000 articles, we used systematic random sampling to draw 20 percent of the articles from each outlet, forming a more manageable corpus.78 After removing letters to the editor, “quote of the day” items, corrections, cartoons, book reviews, sponsorship messages, and any other articles that were not about climate change, we were left with 2,017 articles across the 11 outlets. These form the sample for our analysis.

Analysis

Once the articles were saved as text files organized by year, they were imported into the statistical software R, where stop words such as articles, prepositions, and conjunctions were removed. Following this preprocessing, we computed a sentiment score for every individual article (the total number of positively charged words minus the total number of negatively charged words). We also used the NRC dictionary to count the discrete emotion words (anger, anticipation, disgust, fear, joy, sadness, surprise, and trust) in each article.

Results

RQ 1: What is the sentiment of climate change coverage? Does this vary by news outlet? We assessed the sentiment of climate change coverage through both the NRC Emotion Lexicon and the Lexicoder Sentiment Dictionary (LSD).
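The per-article scoring described under Analysis can be sketched in Python as follows. The stop-word list and polarity word lists are hypothetical placeholders; the study used R together with full stop-word lists and the NRC and LSD dictionaries. The sketch shows only the order of operations: preprocess each article, then subtract its negative word count from its positive word count.

```python
import re

# Hypothetical stand-ins: a tiny stop-word list and tiny polarity word lists.
# The study used R, a full stop-word list, and the NRC and LSD dictionaries.
STOP_WORDS = {"the", "a", "an", "of", "and", "or", "in", "on", "to", "but"}
POSITIVE = {"hope", "progress", "agreement"}
NEGATIVE = {"threat", "disaster", "warming"}

def preprocess(text: str) -> list[str]:
    """Lowercase, tokenize, and drop stop words."""
    return [t for t in re.findall(r"[a-z']+", text.lower()) if t not in STOP_WORDS]

def net_sentiment(tokens: list[str]) -> int:
    """Positively charged words minus negatively charged words."""
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return pos - neg

articles = {
    "article_1": "The agreement brings hope and progress.",
    "article_2": "Warming is a threat and a disaster.",
}
scores = {name: net_sentiment(preprocess(text)) for name, text in articles.items()}
print(scores)  # {'article_1': 3, 'article_2': -3}
```

In this toy example, removing stop words does not change the net scores, because none of the stop words appear in the polarity lists.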
We created a net sentiment score for each dictionary by subtracting the number of negative words from the number of positive words. As shown in Figure 1, the LSD sentiment scores for climate change coverage across all outlets are approximately normally distributed. Scores range from -84 to 84, with more articles having net positive sentiment than net negative sentiment.79 The NRC dictionary produces a range of sentiment scores from -15 to 60, with a longer tail in the positive, upper range (Figure 2). To understand whether individual papers vary in the sentiment conveyed to readers in their coverage of climate, we look at the mean sentiment scores by paper. Using the NRC Emotion Lexicon, we found that mean sentiment scores ranged from 10.57 to 16.55, with the Wall Street Journal having the lowest sentiment (the least positive coverage) and the Tampa Bay Times having the most positive coverage. Using the Lexicoder Sentiment Dictionary, mean net sentiment scores ranged from 1.09 to 8.19, with the Wall Street Journal again having the least positive coverage and the Star Tribune having the most positive coverage (see Table 1 and Figures 3 & 4). While the dictionaries have different numerical ranges, outlets with relatively low or high sentiment scores under one dictionary have roughly similar relative scores under the other (the Pearson correlation between the two dictionaries’ article-level net sentiment scores is .66). To better understand the meaning of these sentiment results, we show the proportion of articles from each outlet that fall in the top or bottom tercile of all sentiment scores (Table 1, columns 4-7). Approximately 40 percent of the articles from the Philadelphia Inquirer and the Wall Street Journal are in the bottom tercile (more negative sentiment) using the Lexicoder Sentiment Dictionary, nearly twice the share of articles from the Star Tribune and The Tampa Bay Times that appear in the bottom tercile.
More than 40 percent of the articles in The Star Tribune, The Boston Globe, and The Tampa Bay Times are in the top tercile. Using the NRC dictionary, The Wall Street Journal had by far the highest percentage of articles in the bottom tercile (50 percent of WSJ articles were among the most negative third of articles in the entire corpus). Under the same dictionary, The New York Times, The Boston Globe, the Tampa Bay Times, and the Star Tribune have the greatest proportion of positive sentiment articles. The results from both the LSD and the NRC consistently show the Globe with more positive coverage and the Journal with more negative coverage than the other outlets in our corpus. Using the Lexicoder Sentiment Dictionary, we find that the Boston Globe’s mean sentiment is significantly more positive than that of both the Post (P < 0.007) and the Journal (P < 0.001). The Star Tribune’s sentiment is also significantly more positive than that of the Wall Street Journal (P < 0.034).80